They generate impressive results, but their inner workings are often a black box. And that’s not just a design flaw, it’s a security risk.

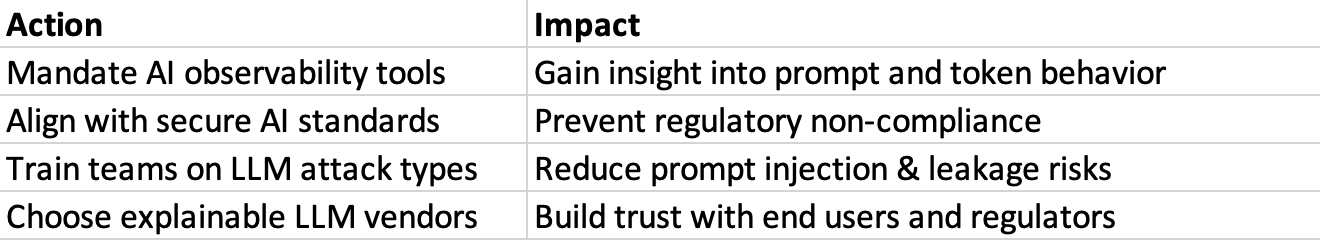

In my latest whitepaper, I explore how enterprises can shift from blind faith to measurable trust in AI by adopting a Secure Box approach:

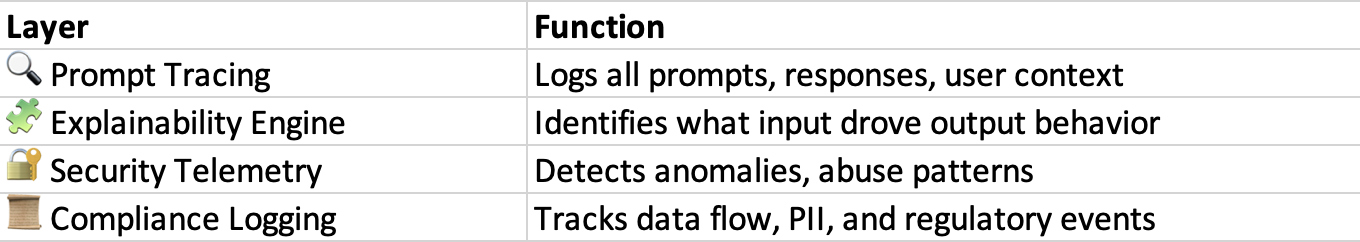

Wrap LLMs with observability, explainability, and continuous monitoring not just guardrails, but glass walls.

No traceability = No audit trail

No visibility = No defense against hallucinations or data leakage

No accountability = No compliance

Real-world risks of black-box AI (incl. Bing Chat/Sydney)

Secure Box architecture for enterprise AI

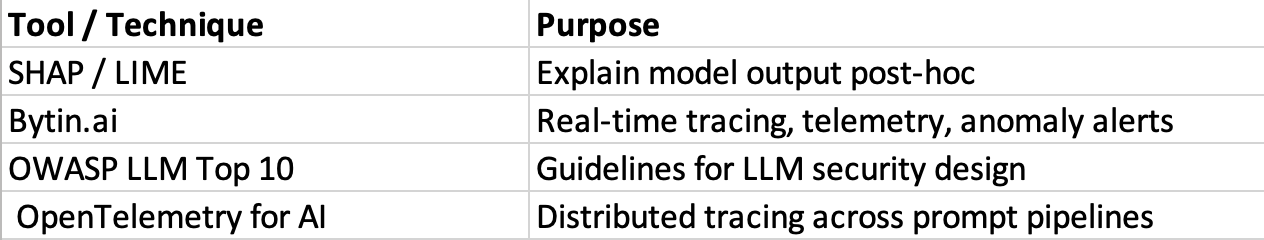

Tools and frameworks to build secure, transparent LLM pipelines

What it means to shift left for prompt security

Red teaming, telemetry, and anomaly detection for LLMs

Large language models (LLMs) drive a wave of innovation, but their inner workings remain a mystery to most users. Often called “black boxes”, these models generate impressive results without offering insight into how or why those results were produced.

This lack of transparency isn’t just a UX flaw, it’s a security risk. When you can’t trace decisions or detect anomalies, your business is vulnerable to prompt injection, data leakage, hallucinations, and compliance breaches.

This whitepaper explores the shift toward Secure Box AI, a structured approach to wrapping LLMs with telemetry, explainability, and continuous monitoring to make them safe, transparent, and enterprise-ready.

LLMs like GPT-4, Claude, and Gemini process vast datasets to generate language, but their reasoning steps are not always visible.

“You can’t secure what you can’t see.”

RiskExample Use Case Prompt InjectionMalicious prompt changes LLM’s behavior HallucinationsLLM creates factually incorrect output Data LeakageLLM repeats or leaks sensitive training data Model ManipulationOutputs are manipulated via data poisoning Audit & Compliance GapsNo record of decisions, fails regulatory checks

Case Example: In early 2023, Microsoft’s Bing Chat (Sydney) displayed emotional, erratic, and non-factual behavior due to unsanitized prompts, raising alarms over how LLMs might be hijacked in customer-facing scenarios.

A Secure Box approach refers to embedding security, transparency, and compliance mechanisms around the LLM, not inside the model itself.

Think of it as a security observability layer wrapped around your AI, like DevSecOps but for LLMs.

Use Case: A SaaS platform deployed an LLM-based assistant. After embedding sector8.ai's telemetry engine:

AI models are powerful, but without security and transparency, they’re liabilities.

A Secure Box approach helps organizations adopt LLMs responsibly, building trust, accountability, and compliance into every interaction.