Built for Visibility. Designed for Trust.

Sector8.ai gives you full-stack observability and security for LLMs, so you can understand, monitor, and secure your AI/ML systems at every stage.

Sector8.ai gives you full-stack observability and security for LLMs, so you can understand, monitor, and secure your AI/ML systems at every stage.

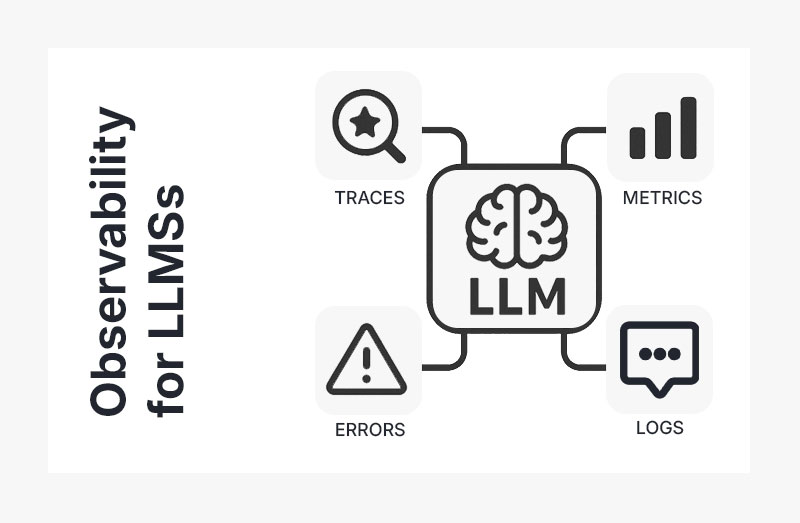

LLMs don’t come with a dashboard.

You don’t know what your users are prompting, how your models are responding, or when things go wrong, until they do.

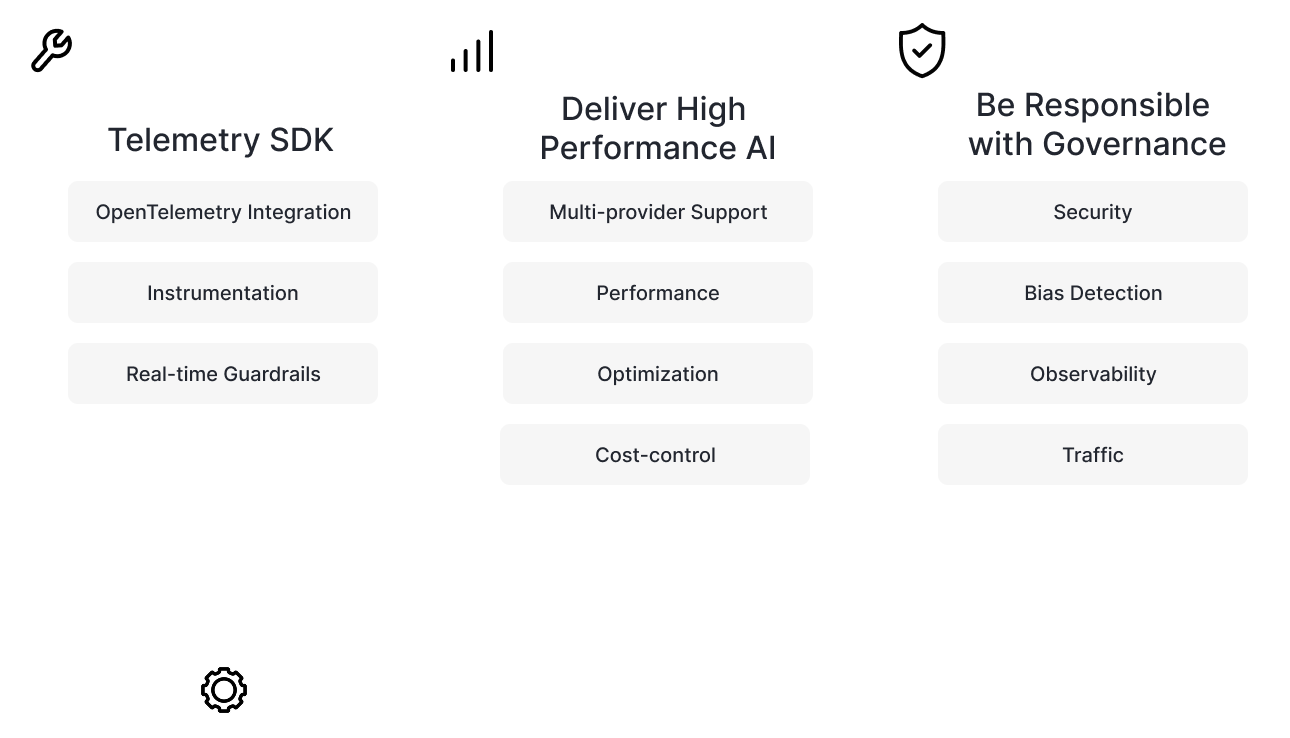

Lightweight OpenTelemetry-based SDK to collect and stream prompt-response traces in real time

Detects prompt injection, prompt chaining, data leakage, and other attack vectors

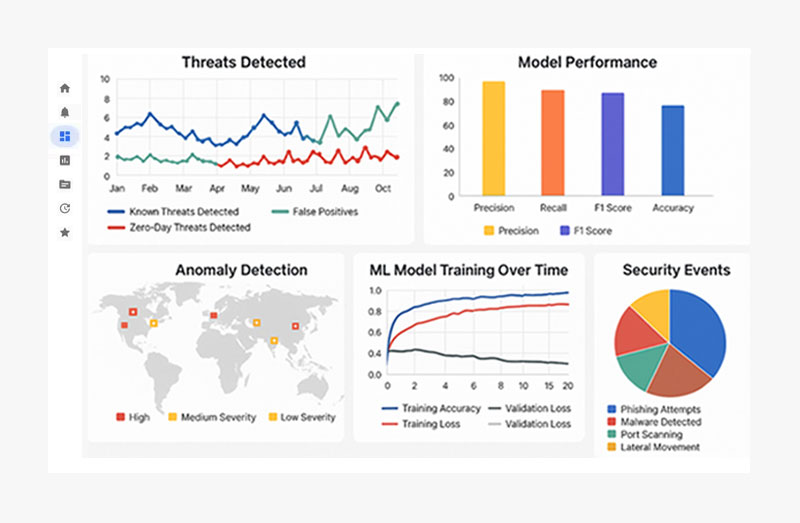

Flag unusual behaviour patterns using AI-driven pattern recognition

Tracks PII exposure, model responses, and risky prompts against GDPR & HIPAA

Enforces policies around model access, logging, and exposure control

Plug into your existing stack: Datadog, Grafana, OpenTelemetry, SIEM tools

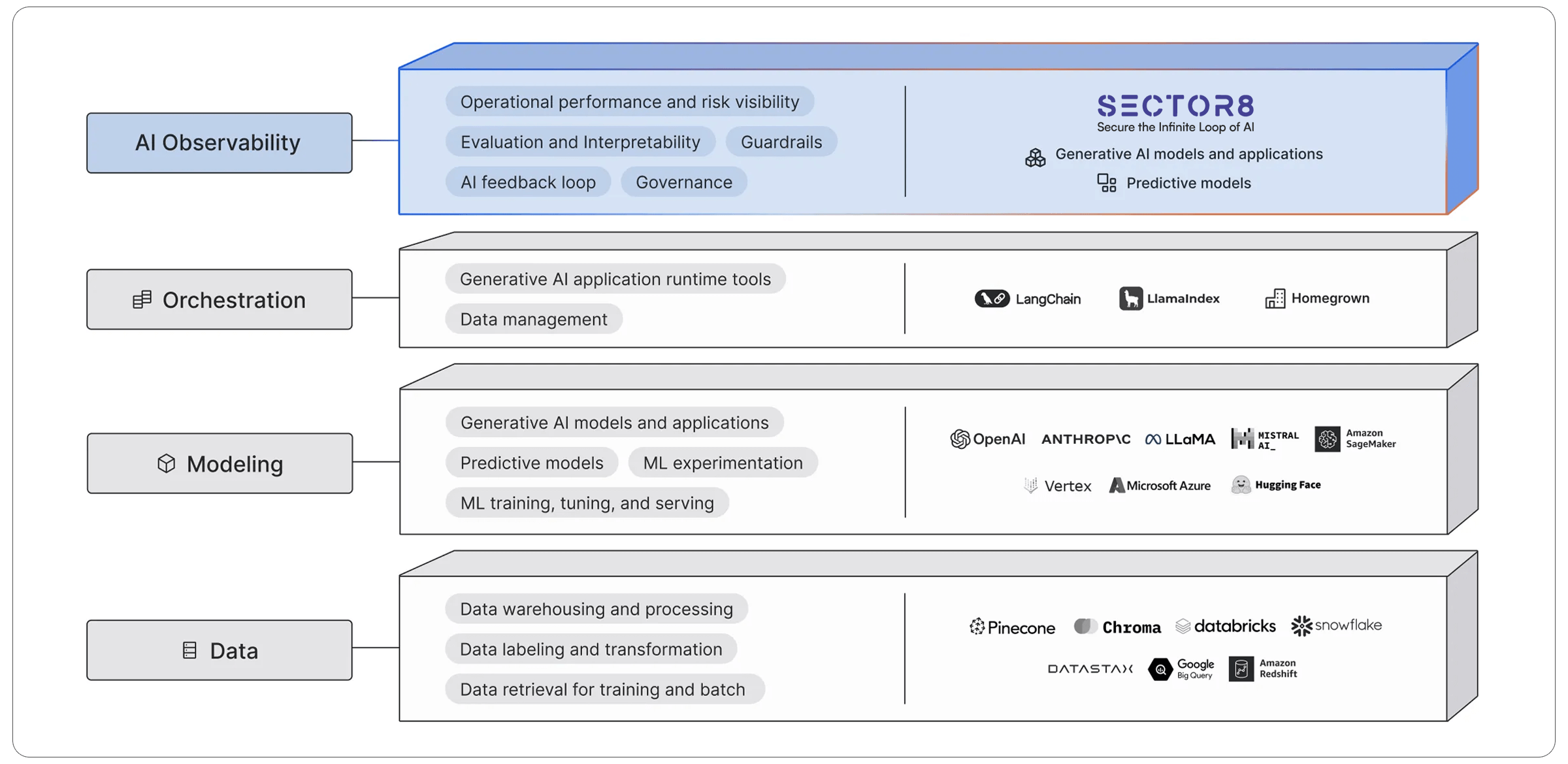

The MOOD stack is the new stack for LLMOps to standardize and accelerate LLM application development, deployment, and management. The stack comprises Modeling, AI/ML Observability, Orchestration, and Data layers.

Data privacy and AI/ML security are built into the foundation, not added as an afterthought.

Visibility + risk insights across LLM usage

Understand model behaviour & anomalies

Policy-ready logging & alerts

Safer LLMs embedded in customer workflows