OpenAI vs. DeepSeek - AI Model Theft Controversy

The OpenAI vs. DeepSeek AI controversy is more than a corporate dispute; it’s a defining moment for AI security. This incident exposes the fragility of AI intellectual property, the risks of open-access AI models, and the ineffectiveness of current AI regulations.

But let’s be clear: this won’t be the last case of AI model theft. If companies don’t act now to secure their models, enforce licensing protections, and demand stronger legal frameworks, we’re heading into an AI arms race with no rules and no boundaries.

This is where AI security needs to evolve: not just in protecting data, but in securing the intelligence itself. In the next phase of AI warfare, it’s not only about who builds the best models; it’s about who can keep them safe.

1. Investigation – How It Started

- OpenAI, in collaboration with Microsoft, launched an internal probe after detecting unusual API activity linked to large-scale data extraction.

- DeepSeek, a Chinese AI startup, quickly gained traction with its AI model, raising suspicion about its rapid development and low reported training costs ($5.6M vs. OpenAI’s $100M+ per model).

- OpenAI accused DeepSeek of using “model distillation”, a process where AI models are trained using another model’s outputs to replicate its capabilities.

- The investigation suggested that DeepSeek may have used OpenAI’s API outputs to train its competing AI system, which would violate OpenAI’s terms of service.
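To make the idea of output-based distillation concrete, here is a minimal toy sketch in Python. It is not how any real system in this dispute was built: the `teacher` function is a hypothetical stand-in for a model that can only be queried, and the "student" is a two-parameter linear model. All names (`teacher`, `collect_outputs`, `train_student`) are illustrative assumptions. The point is only that a student can approximate a teacher from its outputs alone, without ever seeing its internals.

```python
import random


def teacher(x: float) -> float:
    """Hypothetical stand-in for a proprietary model reachable only via queries."""
    return 3.0 * x + 2.0


def collect_outputs(n: int, seed: int = 0) -> list[tuple[float, float]]:
    """Query the teacher on sampled inputs and record (input, output) pairs."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, teacher(x)) for x in xs]


def train_student(pairs, lr: float = 0.1, epochs: int = 500) -> tuple[float, float]:
    """Fit a linear student y = w*x + b to the teacher's outputs by gradient descent."""
    w, b = 0.0, 0.0
    n = len(pairs)
    for _ in range(epochs):
        # Gradients of the mean squared error between student and teacher outputs.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in pairs) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in pairs) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


pairs = collect_outputs(200)
w, b = train_student(pairs)
print(f"student learned w={w:.3f}, b={b:.3f}")
```

With a large language model the same pattern would involve fine-tuning on generated text rather than fitting two numbers, but the dependency is the same: the student's quality comes from the volume and coverage of teacher outputs it can collect.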

2. What Happened? (The Allegation & The Fallout)

- Unauthorized Model Training: OpenAI claims DeepSeek used its ChatGPT outputs as training data, creating a competing AI model without direct access to OpenAI’s proprietary weights.

- API Scraping: Microsoft's security team flagged anomalous API usage, suggesting a DeepSeek-linked group extracted OpenAI’s model responses in bulk.

- Market Disruption: DeepSeek's rapid rise caused shockwaves in the AI market, triggering sell-offs in AI-related stocks.

- Tech Rivalry & Geopolitics: The U.S. government’s AI export controls failed to prevent China from developing competitive AI, raising concerns over regulatory loopholes.

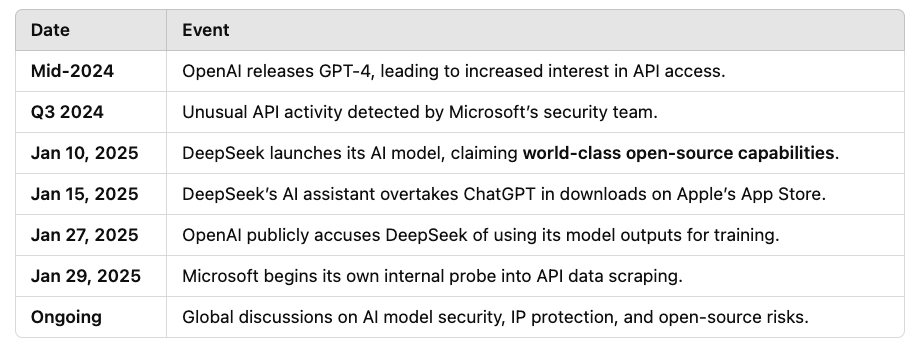

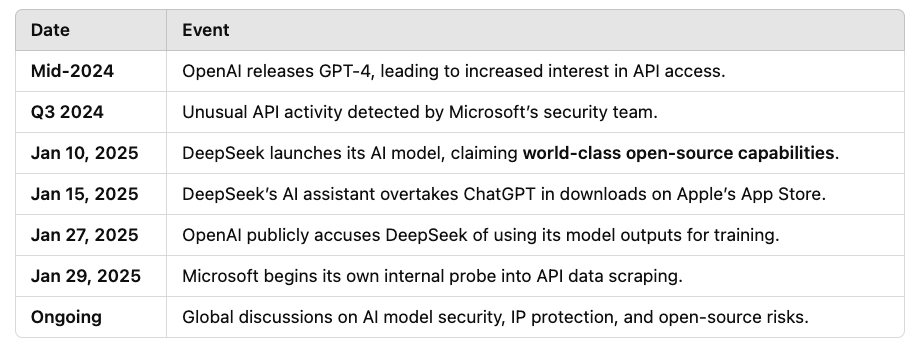

3. Timeline of Events

4. Gaps That Existed (How This Happened)

Weak API Security Controls

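- OpenAI’s API access monitoring was apparently not strict enough to detect and block large-scale data extraction before it happened.

- Whatever anomaly detection and rate limiting were in place did not stop the excessive API queries in real time; the unusual activity was flagged only after the fact.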
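As an illustration of the kind of rate limiting discussed here, the following Python sketch implements a per-key sliding-window limiter. It is a simplified assumption of how such a control could look, not a description of OpenAI's actual infrastructure; the class name `SlidingWindowLimiter`, the key `"key-a"`, and the limits are all hypothetical.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` per API key within any `window_seconds` span."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # api_key -> timestamps of accepted requests

    def allow(self, api_key: str, now: float) -> bool:
        hits = self._hits[api_key]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject and do not record the request
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60.0)
decisions = [limiter.allow("key-a", t) for t in (0.0, 1.0, 2.0, 3.0, 61.0)]
print(decisions)  # [True, True, True, False, True]
```

A limiter like this caps burst volume per key, but on its own it does not stop extraction spread across many keys or accounts, which is why it is usually paired with usage monitoring.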

Lack of AI IP Protection Laws

- AI models exist in a grey legal area: there are no clear global IP protection frameworks for AI-generated content.

- Model distillation is widely used but not explicitly illegal, making enforcement difficult.

Open-Source AI Risks

- DeepSeek leveraged open-source AI tools and combined them with extracted OpenAI data.

- This highlights the growing risk of open-source AI exploitation without licensing controls.

Failure of U.S. AI Export Controls

- Despite U.S. restrictions on AI chips, China still managed to develop competitive AI models using alternative methods.

- The case shows that AI knowledge transfer is harder to control than hardware exports.

5. Vulnerabilities Exploited (What Made This Possible?)

- API Vulnerability: OpenAI’s public API was used to mass-query responses, enabling DeepSeek to extract synthetic training data at scale.

- Regulatory Loophole: Since no global AI copyright laws exist, DeepSeek operated in a legal grey area, replicating model capabilities without directly copying OpenAI’s code.

- Business Model Exploitation: OpenAI’s openly available, self-serve API access allowed external developers to interact with its model, making it easier to extract high-quality responses for training purposes.

- Geopolitical AI Race: The incident underscores how nations are racing to dominate AI. China’s DeepSeek circumvented U.S. AI restrictions by leveraging external data sources.

6. Lessons Learned & Actions Needed

For AI Companies:

- Stronger API Security: Implement real-time monitoring, rate limits, and AI fingerprinting to detect unauthorized data extraction.

- IP Protection Measures: Develop AI watermarking & legal licensing frameworks to prevent model misuse.

- Tighter Model Access Controls: Restrict large-scale API calls and introduce zero-trust authentication.
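One way the monitoring recommendation could be approached is sketched below in Python: flag API keys whose daily request volume is far above what is typical for other keys, using the median and median absolute deviation (MAD) so that a single very heavy user does not distort the baseline. The function name `flag_heavy_keys`, the threshold of 5, and the sample counts are illustrative assumptions, not real usage data or a vendor's actual detection logic.

```python
from statistics import median


def flag_heavy_keys(counts: dict[str, int], threshold: float = 5.0) -> list[str]:
    """Return API keys whose request volume sits far above the typical key."""
    values = list(counts.values())
    med = median(values)
    # Median absolute deviation: a spread estimate one huge outlier cannot inflate.
    mad = median([abs(v - med) for v in values])
    scale = mad if mad > 0 else 1.0  # avoid dividing by zero when most counts are equal
    return sorted(key for key, v in counts.items() if (v - med) / scale > threshold)


# Hypothetical daily request counts per API key.
daily_requests = {
    "key-a": 120,
    "key-b": 95,
    "key-c": 110,
    "key-d": 130,
    "key-e": 48000,
}
flagged = flag_heavy_keys(daily_requests)
print(flagged)  # ['key-e']
```

Volume alone is a coarse signal. A production system would likely also look at prompt diversity, account linkage, and timing patterns, since an extraction effort can be spread thinly across many keys.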

For Policymakers & Regulators:

- Global AI Copyright Frameworks: Establish clear legal guidelines for AI model training and use of synthetic data.

- Stronger AI Trade Regulations: Reassess export controls, focusing not just on hardware but knowledge transfer mechanisms.

For Enterprises & AI Users:

- AI Vendor Due Diligence: Companies must vet AI providers for data protection & IP compliance before integration.

- AI Security Awareness: IT teams should track AI API usage and detect anomalies in AI model behaviour.

Final Thoughts: The AI Arms Race & Future Risks

This incident sets a dangerous precedent: it shows that AI models can be reverse-engineered through indirect methods, raising concerns for all AI companies. The AI industry must act fast to implement stronger security, IP protections, and ethical AI governance before model theft becomes widespread. Expect more AI trade disputes as China and the U.S. battle for AI supremacy, potentially leading to stricter AI regulations worldwide.

What do you think? Are we prepared for this new reality, or is AI security still playing catch-up? Let’s continue this conversation.